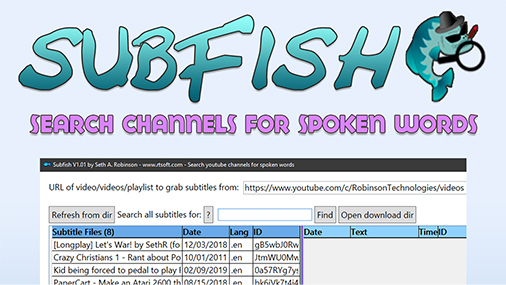

Features:

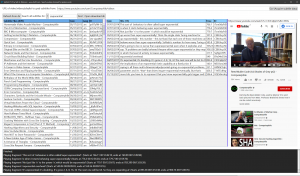

- Download all subtitles from a channel or playlist

- Search subtitles for keywords (regex supported)

- Export video timeline of all found clips as .EDL for Premiere or DaVinci Resolve

- Will notify you on startup if a newer version is available

- Full source code on GitHub, so feel free to submit patches, report bugs, or make feature requests.
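For a sense of what the regex search involves, here is a minimal Python sketch. It is not Subfish's actual code: it assumes the subtitles are WebVTT-style text with `hh:mm:ss.mmm` timings, and `parse_cues` and `search_cues` are hypothetical names made up for illustration.

```python
import re

# Matches the start of a WebVTT cue timing line, e.g. "00:01:02.500 --> 00:01:05.000".
TIMING = re.compile(r"(\d+):(\d{2}):(\d{2})\.(\d{3})\s+-->")

def parse_cues(vtt_text):
    """Return a list of (start_seconds, text) tuples, one per cue."""
    cues = []
    # Cues are separated by blank lines.
    for block in re.split(r"\n\s*\n", vtt_text.strip()):
        lines = [line.strip() for line in block.splitlines()]
        for i, line in enumerate(lines):
            m = TIMING.match(line)
            if m:
                h, mins, secs, ms = (int(g) for g in m.groups())
                start = h * 3600 + mins * 60 + secs + ms / 1000
                text = " ".join(l for l in lines[i + 1:] if l)
                cues.append((start, text))
                break  # one timing line per cue block
    return cues

def search_cues(cues, pattern):
    """Return the cues whose text matches the regex (case-insensitive)."""
    rx = re.compile(pattern, re.IGNORECASE)
    return [(start, text) for start, text in cues if rx.search(text)]

SAMPLE = """WEBVTT

00:00:01.000 --> 00:00:04.000
Welcome back to the channel

00:01:02.500 --> 00:01:05.000
today we go fishing for subtitles
"""

hits = search_cues(parse_cues(SAMPLE), r"fish(ing|es)?")
for start, text in hits:
    print(start, text)
```

Each hit keeps its start time in seconds, which is the piece of information needed to jump straight to that spot in the video.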

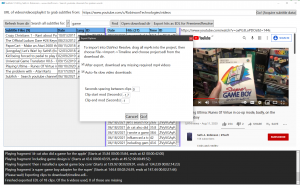

Here’s an example of exporting a lot of clips at once based on finding a single word, using Resolve’s scripting to automatically add the count, date, and YouTube video title each clip is from. (The only “work” I had to do was nudge the clips forward and back a little by hand so that only the correct word was heard, rather than a few seconds before and after it as well.)
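To illustrate what a timeline of found clips looks like as an .EDL, here is a rough Python sketch that writes simplified CMX3600-style events. Everything in it is an assumption for illustration: the function names are hypothetical, it emits video-only events at a fixed non-drop-frame rate, and the file Subfish actually exports may be laid out differently.

```python
def frames_to_timecode(frames, fps):
    """Format a frame count as a non-drop-frame HH:MM:SS:FF timecode."""
    secs = frames // fps
    return "{:02d}:{:02d}:{:02d}:{:02d}".format(
        secs // 3600, secs // 60 % 60, secs % 60, frames % fps
    )

def build_edl(title, clips, fps=30):
    """Build EDL text from clips given as (clip_name, in_seconds, out_seconds).

    Clips are placed back to back on the record (timeline) side.
    """
    lines = ["TITLE: " + title, "FCM: NON-DROP FRAME", ""]
    record = 0  # current timeline position, in frames
    for number, (name, src_in, src_out) in enumerate(clips, start=1):
        # Work in whole frames so the timeline position never drifts.
        in_f = int(round(src_in * fps))
        out_f = int(round(src_out * fps))
        length = out_f - in_f
        lines.append(
            "{:03d}  AX       V     C        {} {} {} {}".format(
                number,
                frames_to_timecode(in_f, fps),
                frames_to_timecode(out_f, fps),
                frames_to_timecode(record, fps),
                frames_to_timecode(record + length, fps),
            )
        )
        lines.append("* FROM CLIP NAME: " + name)
        lines.append("")
        record += length
    return "\n".join(lines)

# Two hypothetical hits: (file name, start seconds, end seconds).
edl = build_edl("fish", [("a.mp4", 62.5, 65.0), ("b.mp4", 10.0, 12.0)])
print(edl)
```

Each event line carries four timecodes: where the clip starts and ends in the source video, then where it starts and ends on the new timeline.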

Download/install instructions (Windows)

To run Subfish, you need to download some Windows libraries from Microsoft first because I’m too lazy to make an installer for now. Requires Windows 7 or newer.

1. Install the .NET 5.0 Desktop Runtime. (Windows version is here)

2. Install the WebView2 runtime (Try here, look for x64 version probably).

3. Download the latest version of Subfish (in a zip) for Windows.

Inside the zip there is a folder called “Subfish”. Drag that folder onto your desktop (or somewhere) to extract it. Then enter it and run Subfish.exe. (The binaries are signed by RTsoft so Windows shouldn’t give you any trouble running it)

Why I made this

Earlier I was doing some YouTube research and needed to look through thousands of videos for spoken words. While I did figure out a way to do it using youtube-dl and text scanning utilities, it was a clunky process and I couldn’t instantly jump to the exact spot in a video to preview it without some shenanigans.

“This is stupid, someone must have made a slick front end for this…” and well, I couldn’t find one, so here we are. As for the name, well, check out my other free utility Toolfish!

The timeline export options were actually added for a friend, but that’s pretty handy too.

Info & Issues

Audio sync problem after importing the EDL timeline into DaVinci Resolve? I think this is a Resolve bug when importing something that has clips with multiple internal timings. No problem – I created a script to fix it; check the ScriptsForDaVinciResolve subdirectory. The readme.txt there explains how to copy FixTimelineSync.py into %PROGRAMDATA%\Blackmagic Design\DaVinci Resolve\Fusion\Scripts\Comp so you can run Workspace->Scripts->Comp->FixTimelineSync in Resolve.

Is there a way to automatically add the date, video counter, video name, etc. on top of the videos in the exported timeline? Yes, I’ve done it with DaVinci Resolve scripts, and I’m hoping to do a tutorial on that later as it’s kind of tricky. The .json metadata we export with each video is useful for this.

It seems to stop after downloading around 4500 subtitles? This seems to be a YouTube limitation. One trick is to download again in reverse order, so 9,000+ from a single channel is possible. I think with some changes to optimize youtube-dl I could have it “continue” pulling data in a much smarter way, but I haven’t been bothered enough to try yet. (youtube-dl’s current date restriction options just don’t work right for subtitles; it still checks every video in order.)
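As a rough illustration of the reverse-order trick, the Python sketch below builds a subtitles-only youtube-dl command line, once in normal order and once with `--playlist-reverse`. The flags are standard youtube-dl options, but `subtitle_command`, the output folder, and the channel URL are hypothetical, and this is not necessarily how Subfish itself invokes youtube-dl.

```python
import subprocess

def subtitle_command(url, out_dir, reverse=False, lang="en"):
    """Build a youtube-dl argument list that fetches subtitles only."""
    cmd = [
        "youtube-dl",
        "--skip-download",    # do not download the video itself
        "--write-sub",        # uploaded subtitles, if any
        "--write-auto-sub",   # auto-generated subtitles
        "--sub-lang", lang,
        "--write-info-json",  # per-video metadata as .json
        "-o", out_dir + "/%(id)s.%(ext)s",
    ]
    if reverse:
        cmd.append("--playlist-reverse")  # walk the channel from the other end
    cmd.append(url)
    return cmd

# Hypothetical channel URL, for illustration only.
URL = "https://www.youtube.com/c/SomeChannel/videos"
forward = subtitle_command(URL, "subs")
backward = subtitle_command(URL, "subs", reverse=True)

if __name__ == "__main__":
    print(" ".join(forward))
    print(" ".join(backward))
    # To actually run both passes (requires youtube-dl on the PATH):
    # for cmd in (forward, backward):
    #     subprocess.run(cmd, check=False)
```

Because both passes write to the same folder keyed by video ID, subtitles fetched twice simply land on the same file names rather than piling up as duplicates.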

I’m getting “This browser or app may not be secure.” when I try to log in to my Google/YouTube account in the preview window?! Yeah, I started getting that recently too. Luckily it has nothing to do with the actual text/video extraction process, but clip previewing tends to show Google ads if you’re not logged in. I think you can fix this by enabling “Less secure apps”, but I didn’t actually try it.

OSX/Linux support? Cross-compiling is a problem due to using WebView2 for now, so I guess that’s out. On a side note, in theory this does support Windows 10 ARM-based devices too, but I don’t have one to test with.

Privacy – On startup, Subfish visits www.rtsoft.com/subfish/checking_for_new_version.php?version=<version #> in its little web-browser thingie which will give a download link if a new version is out. That’s the only communication done with our servers.

Legal – Only use this product if it’s legal to do what you’re doing where you’re doing it. That’s probably good advice for life in general.

To report a bug or make a feature request – Post here, message me on Twitter, or drop me an email.